Many people, myself included, would assume that Blogger, being a Google product, has innate integration with the Google search engine, and therefore any post you write would just automagically appear in Google search results.

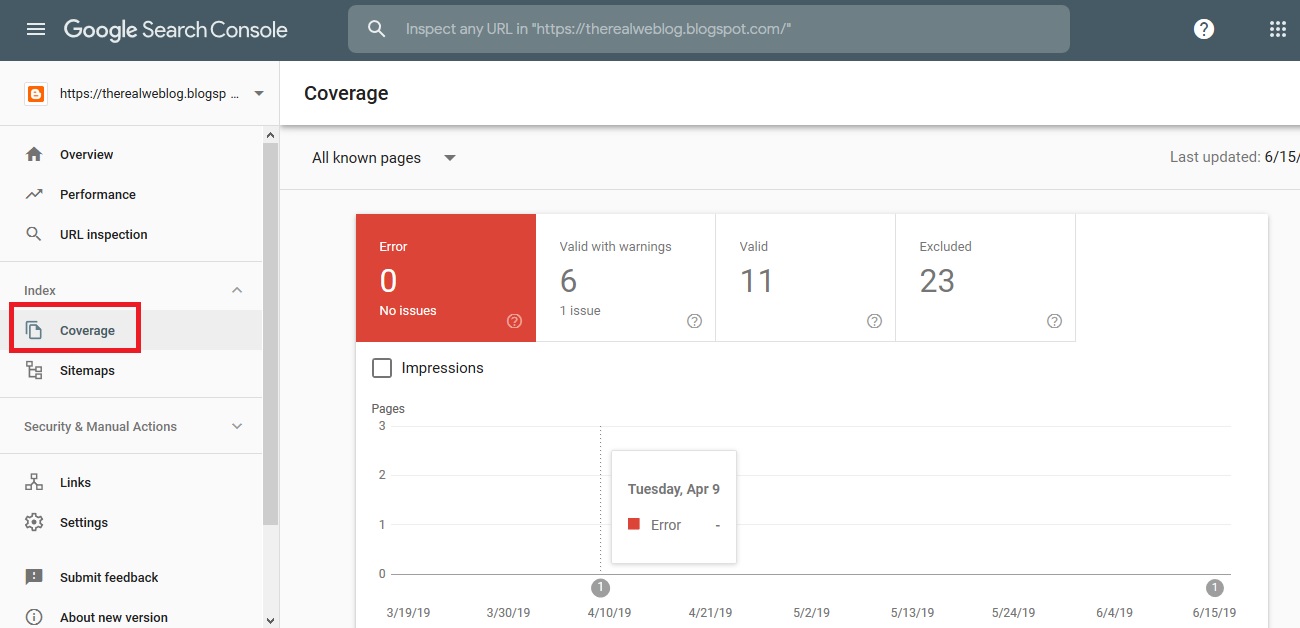

But as a famous man once said:

In this post, I'll list the things that prevented Google from indexing my posts, and how to rectify them.

Also, according to this Wikipedia page, Google has over 90% of the search engine market share, so we will focus on Google only. The same solutions should apply to other search engines as well.

Note that you'll need to have Google Search Console set up already to investigate and fix Google Search problems.

Failure to Discover Pages - Sitemap

Problem - Failure to Discover Pages

The first problem I encountered was that Google didn't know how to navigate my blog, so it discovered only 7 pages. I was really surprised that Google didn't even know how to discover pages from its own blog product.

You can check how many of your pages Google has discovered in Google Search Console, under the Coverage section.

You can also test your site's search results by searching `site:yoursitedomain` in Google's search bar.

Solution - Sitemap

To remedy this, we need to submit the sitemap to Google in Search Console. Blogger doesn't explain this clearly, but it automatically generates a sitemap for you containing all the pages on your blog at `yoursitedomain/sitemap.xml`.

I guess it isn't automatically submitted to Google Search because some people might not like the auto-generated sitemap.

But if you are like me and have no problem with the auto-generated version, then you can just submit it.

In the Sitemaps section in Google Search Console, enter `sitemap.xml` after your site domain and click the SUBMIT button.

It should take Google a few days to discover the pages in your sitemap, and even longer to crawl and index those pages, so check back in a few days.

The good thing here is that Blogger will automatically update this sitemap whenever you make changes to your blog, and Google will automatically check this sitemap for any updates.
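To compare what Google has discovered with what Blogger actually publishes, you can count the URLs in the sitemap yourself. Below is a minimal Python sketch using only the standard library. It assumes the file follows the sitemaps.org protocol, and since I'm not certain whether a given blog serves a plain list of pages (`urlset`) or an index of further sitemaps (`sitemapindex`), it handles both. The function name `read_sitemap` and the sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def read_sitemap(xml_data: "str | bytes") -> tuple[str, list[str]]:
    """Return the root element type and every <loc> URL in a sitemap.

    The type is "urlset" (a list of pages) or "sitemapindex"
    (a list of further sitemaps that must be fetched in turn).
    """
    root = ET.fromstring(xml_data)
    kind = root.tag.split("}", 1)[-1]  # drop the "{namespace}" prefix
    if kind == "urlset":
        locs = root.findall("sm:url/sm:loc", NS)
    elif kind == "sitemapindex":
        locs = root.findall("sm:sitemap/sm:loc", NS)
    else:
        raise ValueError(f"unexpected sitemap root element: {kind}")
    return kind, [loc.text.strip() for loc in locs if loc.text]


# Hypothetical sample; a real blog would use its own domain and post URLs.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://yoursitedomain/2021/05/first-post.html</loc></url>
  <url><loc>https://yoursitedomain/2021/06/second-post.html</loc></url>
</urlset>"""

kind, urls = read_sitemap(SAMPLE)
print(kind, len(urls))  # prints "urlset 2"
```

For a live blog, you could pass the bytes returned by `urllib.request.urlopen("https://yoursitedomain/sitemap.xml").read()`, replacing `yoursitedomain` with your own domain. If the result is a `sitemapindex`, fetch each listed URL and count the pages in those files instead.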

Blocked Label Pages - Robots.txt

Problem - Label Pages Blocked

By default, Blogger's robots.txt blocks search engines from crawling the search pages on your blog, and this includes the search results for labels. Since Google respects the instructions in robots.txt, it won't crawl those pages.

However, if you use labels as a form of navigation, the label pages are important for your users and for search engines to discover pages on your blog, so you want Google to crawl and read the label search results.

Solution - Robots.txt

To remedy this, we need to add the following line to the robots.txt:

`Allow: /search/label`

You can find the content of your robots.txt by going to `yoursitedomain/robots.txt`. Copy down the content, then add the above line under `Disallow: /search`.

Then go to Blogger settings and paste in the new robots.txt content.

Under the Settings menu in Blogger, in Search preferences, click the Edit button next to Custom robots.txt.

Choose Yes for "Enable custom robots.txt content?" and paste the new robots.txt content into the text box.

When done, save the changes.

Double-check the new robots.txt content before saving, because a mistake here could break how search engines index your blog.
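Why does adding an `Allow` line below a `Disallow` line work? Google, like the robots.txt standard (RFC 9309), resolves conflicting rules by using the most specific match, meaning the rule with the longest matching path, and lets `Allow` win a tie. So `Allow: /search/label` (13 characters) beats `Disallow: /search` (7 characters) for label pages, regardless of line order. Before pasting your edited file into Blogger, you could sanity-check it with a small Python sketch like the one below. It is a simplified model, not Google's parser: it ignores the `*` and `$` wildcards, only reads groups that name the requested user agent exactly, and `DEFAULT_ROBOTS` is an assumption about what Blogger's default file looks like, so compare it with your own copy. The helpers `parse_rules` and `is_allowed` are hypothetical. I avoided Python's built-in `urllib.robotparser` here because it applies the first matching rule in file order, which would report label pages as blocked for this exact file.

```python
def parse_rules(robots_txt: str, agent: str = "*") -> list[tuple[str, str]]:
    """Collect (directive, path) pairs from groups naming `agent` exactly."""
    rules: list[tuple[str, str]] = []
    group_agents: list[str] = []
    in_rules = False  # True once the current group's rule lines begin
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if in_rules:  # a User-agent after rules starts a new group
                group_agents, in_rules = [], False
            group_agents.append(value.lower())
        elif key in ("allow", "disallow"):
            in_rules = True
            if agent.lower() in group_agents:
                rules.append((key, value))
    return rules


def is_allowed(robots_txt: str, path: str, agent: str = "*") -> bool:
    """Longest matching path wins; Allow wins ties; no match means allowed."""
    best_len, allowed = -1, True
    for directive, rule_path in parse_rules(robots_txt, agent):
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty Disallow matches nothing
        is_allow = directive == "allow"
        if len(rule_path) > best_len or (len(rule_path) == best_len and is_allow):
            best_len, allowed = len(rule_path), is_allow
    return allowed


# Assumed shape of Blogger's default robots.txt; check yoursitedomain/robots.txt.
DEFAULT_ROBOTS = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: https://yoursitedomain/sitemap.xml
"""

# The fix from this post: add "Allow: /search/label" under "Disallow: /search".
EDITED_ROBOTS = DEFAULT_ROBOTS.replace(
    "Disallow: /search\n", "Disallow: /search\nAllow: /search/label\n"
)

for name, text in [("default", DEFAULT_ROBOTS), ("edited", EDITED_ROBOTS)]:
    print(name, is_allowed(text, "/search/label/Python"))
# prints "default False" then "edited True"
```

To check another page, call `is_allowed` with the path part of its URL; ordinary search result pages such as `/search?q=sitemap` should still come back as blocked.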

End

Above are the issues and solutions I have found in Blogger regarding Google Search. I hope this has been helpful.

If you have more to add, be sure to leave a comment below.
